The 2010s were a decade that saw huge developments in the field of artificial intelligence, with machines being built with the capacity to teach themselves how to play (and win at) strategy-based board games, take a stroll through the woods, recognize human faces, make medical diagnoses, and even drive a car. But some experts are warning that the field of AI development may be on the verge of stalling, the victim of an over-hyped industry that has promised more than it might be able to deliver in the short term.

Through the decades, the development of artificial intelligence has seen peaks and troughs. Within the field, periods of rapid advancement are referred to as “AI summers”, while slowdowns are called “AI winters”, and the 2010s appear to have produced some of the biggest advances in the history of machine intelligence. But the industry appears to be on the eve of a slowdown, at least compared to the leaps and bounds made over the last few years.

Computer scientist Yoshua Bengio, sometimes referred to as one of the “godfathers of AI”, says that the companies developing AI systems somewhat overhyped their capabilities in order to promote them, and that this hype might be starting to cool off.

“By the end of the decade there was a growing realization that current techniques can only carry us so far,” observes Gary Marcus, an AI researcher at New York University.

Leaders in the field are hesitant to label the pending slowdown a full-on AI winter, preferring to call it an “AI autumn”. Given the billions of dollars being put into research, they expect the pace of development in machine intelligence to plateau rather than stall outright.

But what is behind the cooling of the season for AI? The decade’s lineup of intelligent machines is commonly referred to as “AI” (artificial intelligence), but these systems are still stereotypically machine-like. Each one can do what it was designed to do very well, but that is all that one program (or device) can do. The holy grail of the field is what’s known as “AGI”, or “artificial general intelligence”. As the name implies, it would be an intelligence that can grow beyond the narrow specialization it was designed for, in a proper mimicry, if not an outright emulation, of human intelligence.

For example, take the capabilities of DeepMind’s AlphaGo, a board-game-playing AI program. In 2016, AlphaGo bested human Go champion Lee Sedol at his own game, winning four of the five games. After AlphaGo demonstrated the capability to creatively devise its own strategies to beat its human opponent at the ancient Chinese game, its successor, AlphaZero, went on to teach itself to play not only Go, but also chess and shogi.

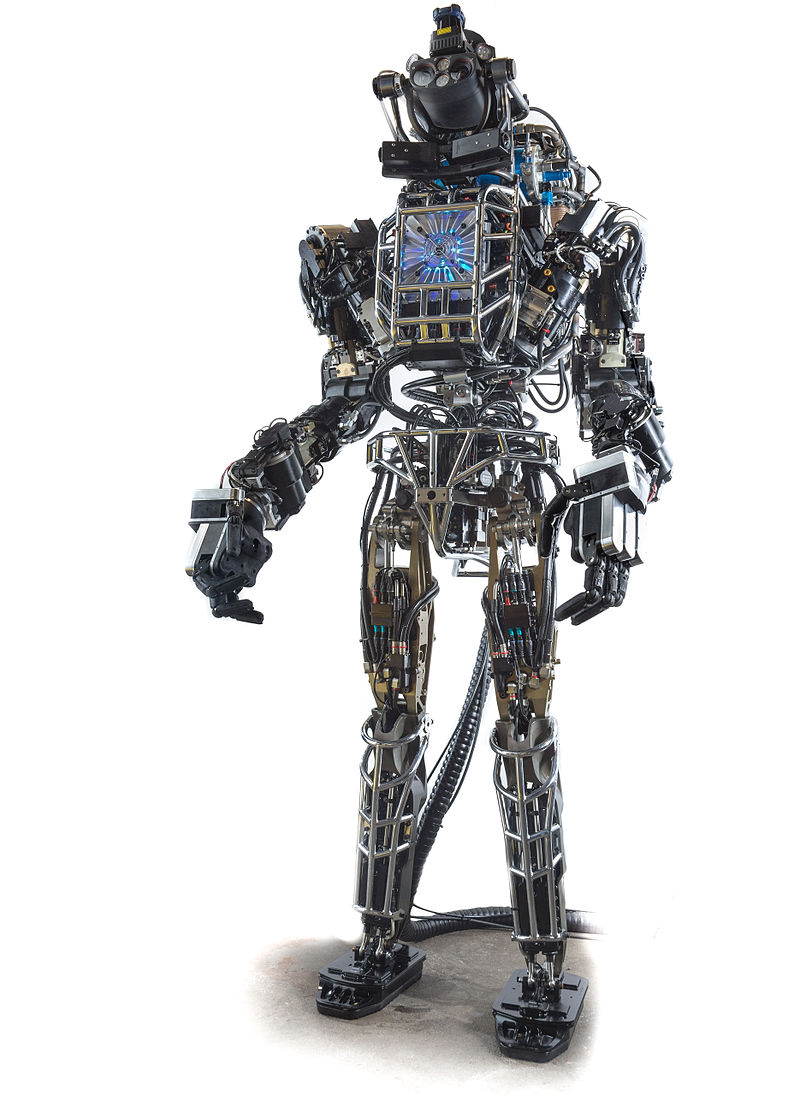

But AlphaGo and AlphaZero would be useless if given comparatively simple tasks like taking a stroll through the woods, something Boston Dynamics’ Atlas robot has become adept at. Conversely, as good as Atlas is at basic parkour, the robot would not be even remotely capable of playing any of the games that its more strategic counterparts (programs that require a supercomputer to run) now routinely master. Each system was designed to do one thing well, and only that one thing.

AGI, on the other hand, could conceivably learn to walk down the street to a nearby chess tournament, take part in a game, read the facial expressions of its opponent, and carry on a light conversation. While each of these capabilities is within the realm of today’s AI, each task is typically handled by its own dedicated program, one that almost always requires significant computing resources. It is that steep computational requirement that prevents AI from becoming truly intelligent in the general sense, which it must do if it is to make any meaningful progress.

“The field has come a very long way in the past decade, but we are very much aware that we still have far to go in scientific and technological advances to make machines truly intelligent,” says Edward Grefenstette, a researcher with the Facebook AI Research group.

“One of the biggest challenges is to develop methods that are much more efficient in terms of the data and compute power required to learn to solve a problem well,” Grefenstette continues. “In the past decade, we’ve seen impressive advances made by increasing the scale of data and computation available, but that’s not appropriate or scalable for every problem.”

“If we want to scale to more complex behaviour, we need to do better with less data, and we need to generalise more.”

In addition to the efficiencies Grefenstette proposes, could an entirely new approach to computer architecture, such as quantum computers or neuromorphic circuits, provide the innovative boost the AI field needs to pull itself out of the impending stall threatening the pace of progress?

But what can we expect to see from AI development if this AI autumn does come to pass? “In the next decade, I hope we’ll see a more measured, realistic view of AI’s capability, rather than the hype we’ve seen so far,” says Catherine Breslin, a former Amazon AI researcher. Indeed, much of the kind of hype seen in the 2010s could be avoided if individual learning programs were taken out from under the umbrella term “AI”. Currently used by companies as a catch-all marketing buzzword, “artificial intelligence” is a somewhat misleading term for today’s “intelligent” algorithms.

“The manifold of things which were lumped into the term ‘AI’ will be recognised and discussed separately,” forecasts Samim Winiger, a former AI researcher at Google in Berlin.

“What we called ‘AI’ or ‘machine learning’ during the past 10-20 years will be seen as just yet another form of ‘computation’.”

I read this article on https://grahamhancock.com/

Scientists use stem cells from frogs to build first living robots… “These are very small, but ultimately the plan is to make them to scale,” said Levin. Xenobots might be built with blood vessels, nervous systems and sensory cells, to form rudimentary eyes. By building them out of mammalian cells, they could live on dry land.

https://www.theguardian.com/science/2020/jan/13/scientists-use-stem-cells-from-frogs-to-build-first-living-robots

You often end up being caught by the spam filter, Carollee. Why, we don’t know. So there’s going to sometimes be a delay on your posts because we have to repost them manually.

Thank you for taking the time to repost them manually. I wonder why some of my posts end up in the spam filter?

No robots in me, thanks.

It is stalling because humans don’t understand consciousness.

Consciousness is literally and potentially connected to everything. This literal connection can overtly manifest, with the application of awareness. Awareness is the difference between the connection being inert, unconscious and in the background, or active, conscious, and in the foreground. Until AI is connected to everything, it will lack resources and ultimately comprehension. Humans explore levels of consciousness, particularly in dreams; subtle directional hints take us on unique trajectories. An AI might be fashioned that can explore and examine consciousness (a final frontier, as it were) by assisting in the teasing open of previously unopened avenues and bringing them into awareness. The machines might be adept at discovering possible inroads in the formative moments of a thought or group of thoughts, by using their powers of calculation. This would be a beneficial use of AI for machine/human interaction and learning: machines could help humans access latent levels of consciousness. Reliance upon machines as the resource for knowledge is doomed to stagnation; it can only proceed so far. Until humans understand that consciousness is connected to everything, AI development will falter. It is also possible that machines could be outfitted with and plugged into a type of consciousness, if that consciousness is willing. It would then have the capabilities of machine and spirit.

Is asking questions a key to generalized artificial intelligence? Not just preprogrammed questions with deterministic answers, but the ability to ask questions about anything and everything and adapt algorithms and behaviors according to the information gathered in seeking the answers.

This brings to mind observations of Visitors, etc., that seem machine-like. Perhaps we ourselves, attached to AI, would bring about full-on AGI? Mason seems so in tune with this.

The visitors may have computers that contain spiritual components. A marriage of machine and consciousness has definite advantages compared to machines alone. It is possible machines have a dim consciousness, but revealing and enhancing it seems to be problematic, difficult and clumsy. A commingling of machine and consciousness would precipitate development of AI, acting as a catalyst. It is possible spirits are already inhabiting some of the synthetic machines that have been constructed (the human body is a type of machine, after all), although these spirits are probably on a rudimentary level, existing close to the sophistication of the design.

My god, Whitley, every time I read an update on AI and/or experiments using biological material for computing, I think of predictions from “Master of the Key”. Except for the potential for a new ice age, almost everything else is playing out as a distinct possibility or current reality.

Consciousness is analog, not digital. Once the significance of that fact is accepted in university AI graduate programs and in research institutions, then progress in the development of conscious machines will begin to occur. Currently, the most effective modeling of complex systems is in analog weather software, housed in supercomputers.

Consciousness is best modeled by waves, waves that intersect and interpenetrate each other. Consciousness is also able to gather waves from novel resources and intermingle waves so emergent, nascent waves manifest. The place where all waves converge is an endless resource and center of perfect balance. Time has ceased to transpire there, and instantaneous transmission and communication of energy can occur there. If you look at the consciousness contained in the brain, there is a lot of free correspondence and mingling via emotions, neurochemicals, and impulses.